A Landscape of AI-discovered Molecules and Target Novelty Analysis

ALSO: Weekly highlights; An AI Model to Predict RNA Expression; How This Company is Building a New Framework for Clinical Trials; Notable Trends and Companies to Follow in Neuroscience

Hi! I am Andrii Buvailo, and this is my weekly newsletter, ‘Where Tech Meets Bio,’ where I talk about technologies, breakthroughs, and great companies moving the biopharma industry forward.


Now, let’s get to this week’s topics!

Weekly tech+bio highlights

💰 Regeneron announces venture investing plans, committing $100 million annually for five years to fund therapeutics, medical devices, and health tech tools, as it continues to showcase its entrepreneurial spirit with a market cap near $100 billion.

🚀 Novo Nordisk launches a new AI research facility in London's Knowledge Quarter, focusing on integrating Artificial Intelligence and Machine Learning into drug discovery. This Digital R&D Hub aims to accelerate target identification, molecule design, and clinical outcome predictions through AI, enhancing their drug development process.

🚀 BenevolentAI, a pioneering AI biotech with a recent history of setbacks, announces a significant boardroom reshuffle effective May 2, following founder Kenneth Mulvany's plans to rejoin as deputy chair alongside new independent directors, aiming to enhance governance and future value creation.

🚀 Nvelop Therapeutics launches with $100M in seed funding, introducing two innovative in vivo delivery approaches for gene therapy, developed by gene editing experts David R. Liu and J. Keith Joung. These platforms aim to safely and effectively deliver genetic medicines, potentially transforming treatments for currently unreachable patient populations.

💰 US-based Canaan Partners boosts its biotech investment pool by $100 million, following an $850 million fund last year, with plans to focus on early biopharma company formation and venture series rounds. The firm also welcomes Pfizer veteran Uwe Schoenbeck as a venture partner to strengthen its biotech investment strategy.

🔬 Aspen Neuroscience announces the dosing of the first patient in the ASPIRO Phase 1/2a trial, evaluating ANPD001, an autologous dopaminergic neuron cell replacement therapy for moderate to severe Parkinson's disease, marking a step in personalized regenerative neurologic therapies.

💰 Bruker acquires NanoString Technologies, a spatial biology company, for $392.6 million. NanoString, known for its RNA and protein analysis technology, filed for bankruptcy in February before the acquisition.

💰 23andMe CEO Anne Wojcicki plans to take the company private, as revealed in a recent securities filing. Despite the company's struggles with its dual role in consumer genetics and drug development since its 2021 SPAC public offering, Wojcicki is exploring financing options and is not considering alternative transactions.

💰 Corner Therapeutics raises $54 million in Series A funding to advance new immunotherapies for cancer and infectious diseases, led by healthcare veteran Steve Altschuler. The startup plans to enter the clinic with programs targeting influenza and HIV, focusing on novel dendritic cell activation platforms.

🔬 Insilico Medicine discovers a new class of orally bioavailable Polθ inhibitors for BRCA-deficient cancers, using generative AI for precise molecular modifications. The compounds, described in Bioorganic & Medicinal Chemistry, demonstrate robust potency and promising druglike properties.

An AI Model to Predict RNA Expression in Whole Slide Images

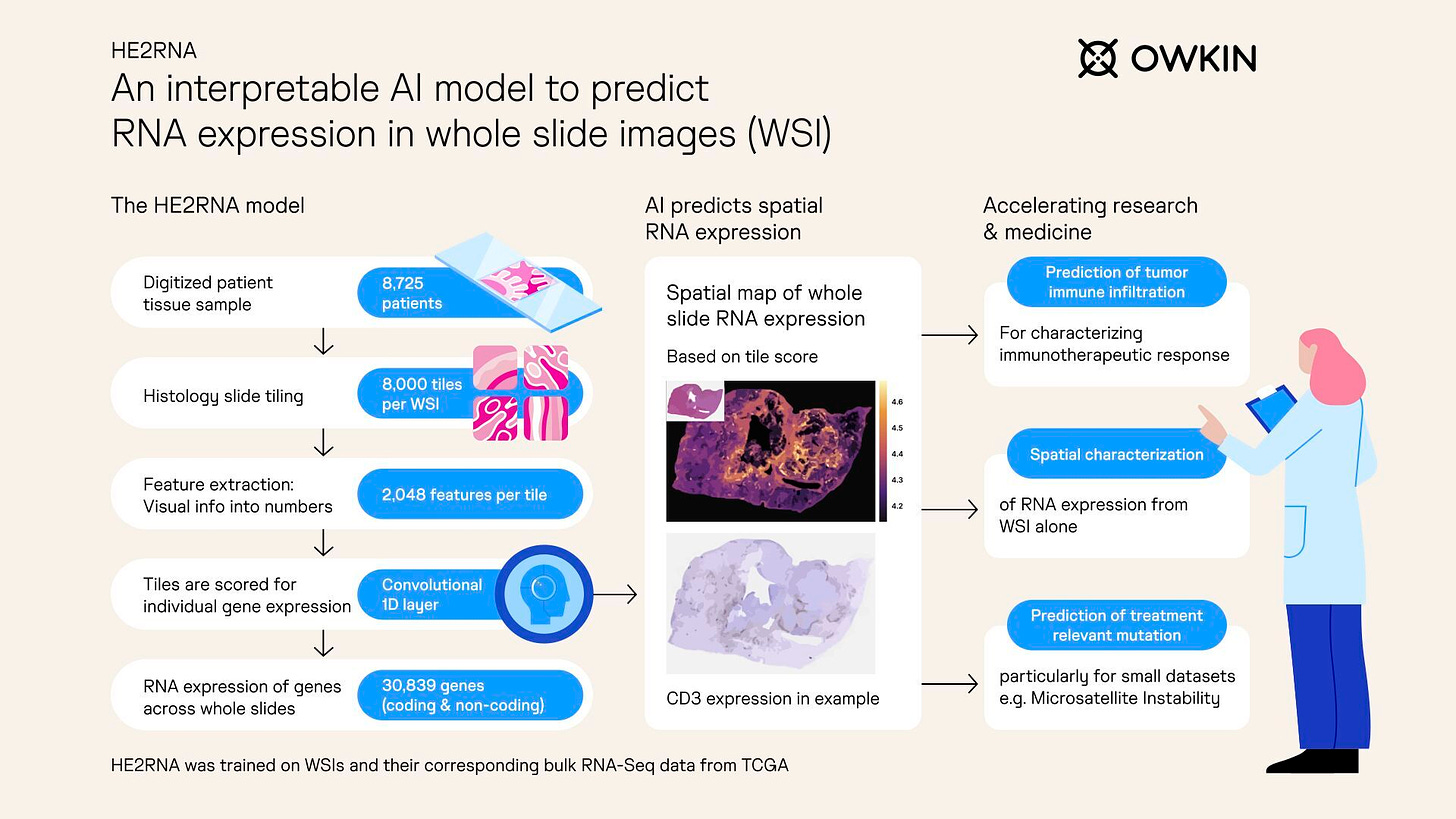

Today I’d like to highlight HE2RNA, an AI model that predicts RNA expression from whole slide digital images. The tool was built by Owkin, an AI company based in Paris and New York.

Designed for interpretability, HE2RNA serves as a virtual spatialization tool, generating heatmaps to visualize the expression of any gene.

This AI model is adept at predicting tumor immune infiltration, detailing the spatial expression of RNA, and identifying treatment-relevant mutations in diseases.

Unlocking Interoperability with AI: How This Company is Building a New Framework for Clinical Trials

(Disclaimer: this interview is sponsored by Trialize)

In an industry as critical as clinical trials, where efficient data management and swift processes are paramount, outdated technology often creates organizational barriers. Digital tools, data management systems, and analytical dashboards are common ways to improve clinical trial design and operational efficiency.

In fact, surveys reveal tangible advances in efficiency and technological integration within clinical trials. Adoption of digital tools, including electronic Investigator Site Files (eISF) and electronic consent (eConsent), climbed from 61% in 2020 to 89% by 2022 among clinical trial sites and sponsors.

Additionally, 74% of sponsors surveyed in that study used remote monitoring and anticipated that this share would reach 91% by the end of 2022, highlighting a substantial shift towards digital oversight.

However, the advent of large language models over the last couple of years has presented novel opportunities to improve clinical trial design.

That's why I sat down with Leo Stoianov, CEO of Trialize, a company at the forefront of redefining clinical trials through innovative technology.

We discussed the still substantial challenges that clinical trials face today and how Trialize is addressing these through automation and artificial intelligence, transforming months-long preparations into a matter of days.

Leonid shared insights into how their integrated platform not only speeds up processes but also enhances data integrity and simplifies the user experience across various functionalities such as EDC, ePRO, and more.

This conversation delves into the promise of new technologies in making clinical trials more efficient, accessible, and ultimately more successful in delivering timely medical innovations.

Leonid, could you share the journey that brought technology and clinical trials together for you? What sparked the idea to innovate in a field known for its cautious pace of change?

I learned the need the hard way. During my time managing a software engineering department in a CRO, we had many sleepless nights trying to integrate various e-clinical systems, EHRs, LIMS, and BI. We did a lot by introducing cutting-edge MetaData Repositories and Statistical Computing Environments, but eventually, I realized we weren't addressing the root cause, but rather trying to duct-tape what was broken by definition. The industry had solutions built for a different reality, with a different mindset, where the term "interoperability" sounded like a voodoo chant. Meanwhile, a groundbreaking change was happening in healthcare with the introduction of HL7 FHIR.

I realized we had to reimagine and re-engineer the entire process, from designing the data model in a standard but flexible way, to capturing data both clinical and directly from patients, to monitoring and, finally, to analysis. As a software architect, I understood the importance of the concept "don’t break things," especially in our industry.

This led to the creation of Trialize, an integrated platform built atop a new paradigm where the system itself acts more like an opinionated framework than a "black box," where AI-enhanced process automation and data models rule, allowing for maximum flexibility while bringing full control over the data and processes.

AI is dramatically reshaping the landscape of many industries, including clinical trials. How do you see AI, particularly through advancements like Large Language Models, transforming clinical trial processes? And could you simplify how these technologies are making an impact for those not deeply familiar with AI?

Absolutely. Imagine your Case Report Forms. Once created, they can be reused from study to study with minor changes. That's what MDRs are used for. LLMs take this further by adding a level of "smartness" to the process: they produce even better, already adapted CRFs, and you just need to "confirm" or "tweak" the remainder.

Imagine EditChecks, which ensure the quality of captured data. That's code. Previously, you had to adapt or rewrite them manually for each study. Now, the process is semi-automatic and AI-assisted; you can generate them as the AI "interprets" the model you're working on.
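To make "EditChecks are code" concrete, here is a minimal Python sketch of what such a check might look like. It is an illustration only, not Trialize's implementation: the field names (`systolic_bp`, `visit_date`, `consent_date`) and the 60-250 range are assumptions chosen for the example.

```python
from datetime import date


def check_visit_record(record):
    """Return a list of issue messages for one hypothetical CRF record.

    Field names and the plausibility range are illustrative assumptions.
    """
    issues = []

    # Range check: flag missing or implausible blood pressure values.
    sbp = record.get("systolic_bp")
    if sbp is None:
        issues.append("systolic_bp is missing")
    elif not 60 <= sbp <= 250:
        issues.append(f"systolic_bp {sbp} outside expected range 60-250")

    # Cross-field check: a visit cannot happen before consent was given.
    if record["visit_date"] < record["consent_date"]:
        issues.append("visit_date precedes consent_date")

    return issues


clean = {"systolic_bp": 120,
         "visit_date": date(2024, 1, 10),
         "consent_date": date(2024, 1, 1)}
suspect = {"systolic_bp": 400,
           "visit_date": date(2023, 12, 1),
           "consent_date": date(2024, 1, 1)}

print(check_visit_record(clean))    # no issues expected
print(check_visit_record(suspect))  # one range issue, one date issue
```

In an AI-assisted workflow like the one described, checks of this kind would presumably be proposed from the study's data model and then reviewed by a person, rather than written from scratch for each study.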

Imagine Verification. Previously, people had to do it for each field, or for a predefined set of fields in targeted SDV. Now, the system automatically highlights the elements of interest.

Imagine Queries. Systems now produce them automatically with loosely defined logic (not hard-coded), as LLMs allow you to identify discrepancies more easily.

Imagine Medical Coding. AutoCoders previously matched with simple logic: if verbatim + A, B, C was coded to Term X, then other identical verbatims should be coded alike. LLMs elevate this further by allowing you to find candidate terms for a group of verbatims even if those are not identical.
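The difference between the two autocoding styles can be sketched in a few lines of Python. This is a rough illustration under stated assumptions: the verbatim-to-term table is made up, and plain string similarity from the standard library's `difflib` stands in for an LLM, which would match on meaning rather than spelling.

```python
from difflib import get_close_matches

# Hypothetical previously coded verbatims mapped to dictionary terms.
CODED = {
    "headache": "Headache",
    "head ache": "Headache",
    "nausea": "Nausea",
    "feeling sick": "Nausea",
}


def exact_autocode(verbatim):
    """Classic autocoder: reuse a term only when the verbatim matches exactly."""
    return CODED.get(verbatim.strip().lower())


def candidate_terms(verbatim, cutoff=0.6):
    """Propose candidate terms for similar, non-identical verbatims.

    String similarity is a stand-in here for LLM-based matching.
    """
    key = verbatim.strip().lower()
    matches = get_close_matches(key, list(CODED), n=3, cutoff=cutoff)
    terms = []
    for match in matches:
        term = CODED[match]
        if term not in terms:  # deduplicate while keeping rank order
            terms.append(term)
    return terms


print(exact_autocode("headaches"))   # exact lookup finds nothing
print(candidate_terms("headaches"))  # fuzzy lookup still proposes a term
```

The output is a list of candidates, not a final code: in this sketch, as in the workflow described above, a human coder confirms or rejects the suggestion.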

Imagine Monitoring. Previously, you had to visit sites regularly. Now, you can mix tele-visits with traditional visits and expect a significant overall reduction in the number of visits. How? By getting real-time insights on the processes and data quality happening on sites. You don’t need to wait weeks to produce intel; LLMs can help generate it for you by writing relevant SQL and building charts. Just prompt in human language what you want to know, what’s important to the success of your trial, and voila, you have what you need at your fingertips, immediately.
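As an example of the kind of SQL an assistant might draft for a prompt such as "which sites have the most open queries?", here is a small self-contained Python sketch using an in-memory SQLite database. The `data_query` table and its columns are hypothetical, invented for this illustration, and do not describe any real system's schema.

```python
import sqlite3


def open_queries_per_site(rows):
    """Count open data queries per site, highest count first.

    rows: iterable of (site_id, status) tuples; the schema is hypothetical.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE data_query (site_id TEXT, status TEXT)")
    conn.executemany("INSERT INTO data_query VALUES (?, ?)", rows)

    # The statement a monitoring assistant might generate from the prompt.
    sql = """
        SELECT site_id, COUNT(*) AS open_count
        FROM data_query
        WHERE status = 'open'
        GROUP BY site_id
        ORDER BY open_count DESC, site_id
    """
    result = conn.execute(sql).fetchall()
    conn.close()
    return result


sample = [("S01", "open"), ("S01", "closed"), ("S02", "open"), ("S02", "open")]
print(open_queries_per_site(sample))
```

Note that the query only reads data, which fits the later advice in this interview about not letting an LLM "write" data.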

Imagine Medical Writing. LLMs have proven to be good assistants here, significantly reducing the time needed to produce documents.

And that’s only the tip of the iceberg. The cumulative productivity gains provided by tailored LLMs are now easy to quantify, as described in our latest case study here on BPT.

The management of sensitive data within clinical trials is paramount. From your vantage point, what are the cutting-edge strategies or technologies that are enhancing data security and integrity in the face of increasing automation?

There are several strategies I consider essential for data security:

Do not use general-purpose LLM APIs which you cannot validate or ensure compliance with.

Implement validation policies tailored to address LLM specifics - you might want to update your TQLC and SDLC SOPs to address this.

Keep it in-house within your VPC or work with preferred partners who can ensure industry-specific compliance requirements and share responsibility with you.

Implement an LLM-focused red team system with guards to ensure automated safety and security assessments.

Do not allow the LLM to "write" data; instead, use it to propose metadata and algorithms by reading what's already there.

The adoption of Agile methodologies marks a significant evolution in clinical trials. What effects do you anticipate this shift having on drug development’s speed and efficiency? Can you point to any instances where this approach has already shown its effectiveness?

Given my technical background, I’ve seen various interpretations of the “Agile” theme. Most misconceptions start to emerge as people realize that Agile is not about “ship it fast, fix issues later”. In our industry, the quality of data directly affects analysis outcomes and whether you’ll receive approval from regulatory bodies. Being “agile” is about being “flexible” and “adaptive”. It involves starting small, having shorter validation cycles, making incremental improvements, and establishing instant feedback loops to inform and embrace change.

This requires a change in systems, processes, and people’s mindsets. Constant change is a painful process as it requires regular mental exercise, consumes energy, and brings a feeling of “uncertainty.” It necessitates a focus on reduction—“less is more”—and a battle to discern what’s essential at the moment while always keeping the long-term goal in sight. Legacy systems were not built to be “flexible.”

Speaking of processes, imagine retraining 1,000 people on new or revised SOPs. That’s why I believe Agile should not be imposed top-down, but rather embraced bottom-up—create a dedicated business unit that encompasses all necessary elements to deliver end-to-end, think of it as an internal enterprise startup, and give them the flexibility to innovate.

Then gradually scale up their success and best practices to the entire organization. To become truly "agile," the entire organization must adopt an entrepreneurial mindset, which is challenging to instill.

Technology adoption in clinical trials isn't just about the data or algorithms; it's deeply influenced by human factors. Could you recount a situation where the human element—whether it's collaboration among teams, relationships between sponsors and CROs, or patient interaction—played a pivotal role in the success or insight gained from a trial?

People always play a pivotal role. They are the ultimate decision-makers and outcome influencers. Systems and processes can only support or hinder their efforts. It’s a matter of mental energy consumption. If you’re constantly battling with the system or process, it diminishes your chances of success. The best process is the one you hardly notice because it functions so seamlessly. To keep people motivated, the system should focus on reducing everything, especially repetitive tasks, while providing a pleasant user experience. If everything goes smoothly, you’ll rarely even mention it; it’s taken for granted.

As we look to the future, what do you believe are the next big technological breakthroughs or trends that will further revolutionize the clinical trials space? How might organizations, possibly including your own initiatives, need to adapt to stay at the forefront of these changes?

We are still unable to test on digital twins effectively: the quality data isn’t there yet, and the models are far from perfect. We can’t automatically produce Statistical Analysis Plans (SAPs) out of clinical protocols, just assist in writing them. While we’ve made significant progress with SDTM generation from raw data, generating ADaM datasets is still a long way off. QA/QC tracks, while being AI-assisted, still require considerable improvement. The ultimate breakthrough will occur when we can conduct a completely “synthetic trial” to predict outcomes before starting the actual study on humans.

Innovating within highly regulated sectors like clinical trials comes with its own set of challenges. Reflecting on your journey, what obstacles have you encountered, and what key lessons would you share with those looking to bring new technologies into this space?

Throughout the long history of industry maturation, many best practices and established processes have emerged. Along with these, we've inherited significant inefficiencies, concerning levels of bureaucracy, and internal politics that consume precious resources and slow down the industry. The focus has shifted from delivering innovative treatments to battling internal demons. That’s why most innovation now comes from acquiring startups rather than fostering in-house development.

It's essential to maintain enthusiasm and productivity in a professional environment burdened by excessive meetings and paperwork. We have studies that are at various stages of automation, including one that is close to what we jokingly call “automation singularity,” where a very small team at a CRO successfully managed a complex multi-site Phase II trial that was originally failing so badly the sponsor considered canceling it.

The key is mutual willingness from both sponsors and CROs. Sponsors need to be aware of modern approaches, and CROs need to embrace the newfound flexibility provided by emerging technologies.

Read Trialize’s technical case study: How AI and Automation Reduced Study Build Time from Months to Days

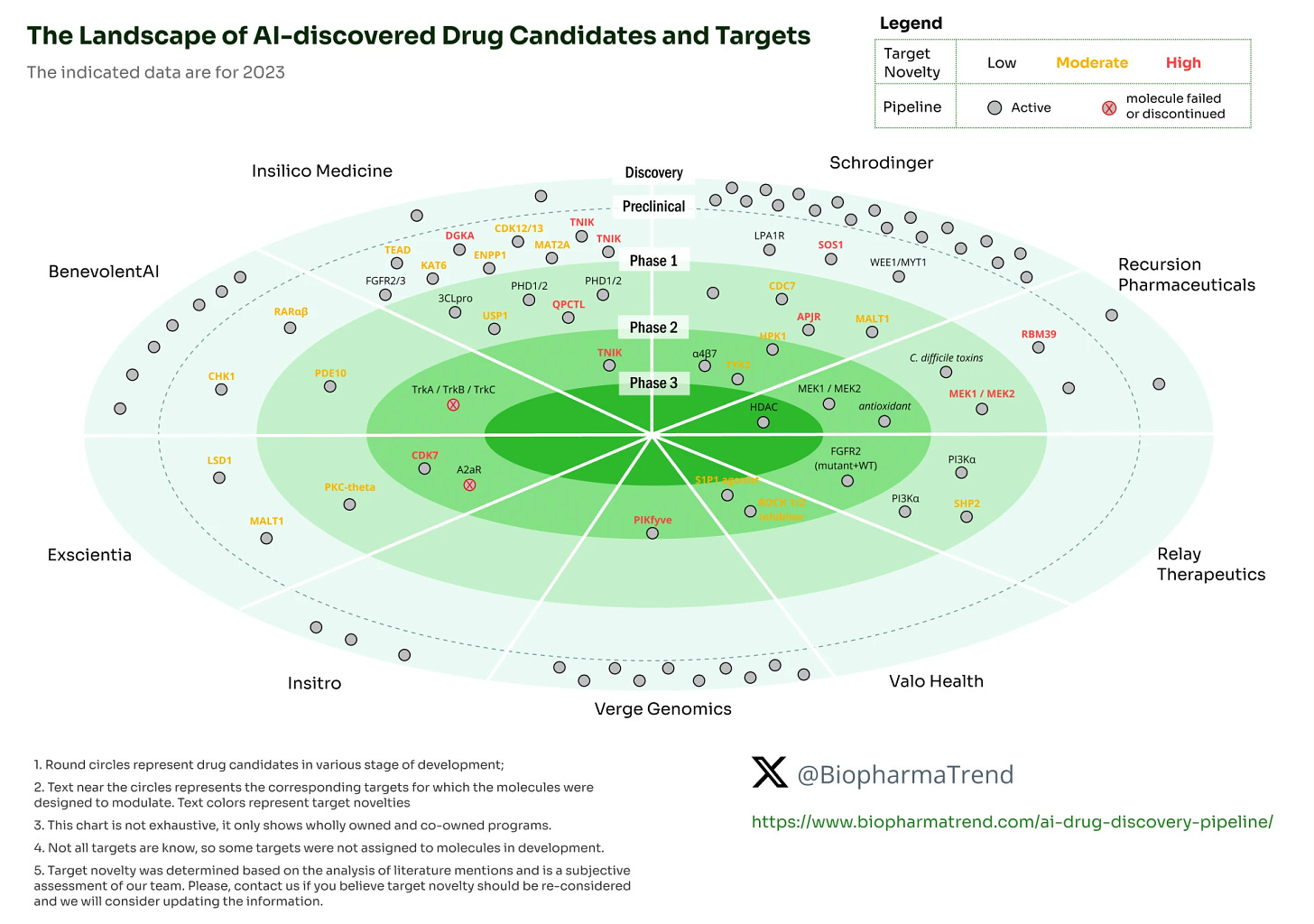

A Landscape of AI-discovered Molecules and Target Novelty Analysis

We have recently launched a report, “It’s Been a Decade of AI in the Drug Discovery Race. What’s Next?” where we gathered historical data about drug pipeline progression over the years for the selected AI-driven drug discovery platforms.

Those included BenevolentAI, Exscientia, Insitro, Insilico Medicine, Recursion, Relay Therapeutics, Schrödinger, Verge Genomics, and Valo Health.

Now, we’ve gone a step further and attempted to analyze the novelty of the targets that the above companies are pursuing in their leading drug programs.

Some of the targets were highly novel and even AI-discovered, as in the case of Insilico Medicine, Recursion Pharmaceuticals, Schrödinger, and Verge Genomics.

In other cases, targets were moderately novel, or even very old and well-known.

This target novelty evaluation is an ongoing study, so we may further adjust the evaluation methodology for the upcoming research paper.

The Excel file with the data for the target novelty and AI-discovered programs is available for download below (for paid subscribers).

Next up: Notable Trends and Companies to Follow in Neuroscience